NVIDIA

Today, we talk about NVIDIA, which recently announced its Q3 earnings, along with a slew of new software tools to pursue more addressable markets.

The Data Center Business

NVIDIA is a company we have discussed in the past (for a primer on NVIDIA and the semiconductor landscape, read this). It best exemplifies the combination of luck and the management skill to take advantage of that luck. The luck came when researchers discovered that programmable GPUs (graphics processing units), traditionally used for PC gaming, are well suited to machine learning applications. This discovery massively increased NVIDIA’s total addressable market.

NVIDIA’s GPUs are particularly good for machine learning applications because: (i) they have thousands of cores that can run highly parallel workloads, and (ii) NVIDIA maintains a strong software platform (CUDA) that makes its GPUs easy for others to program (an integrated offering).

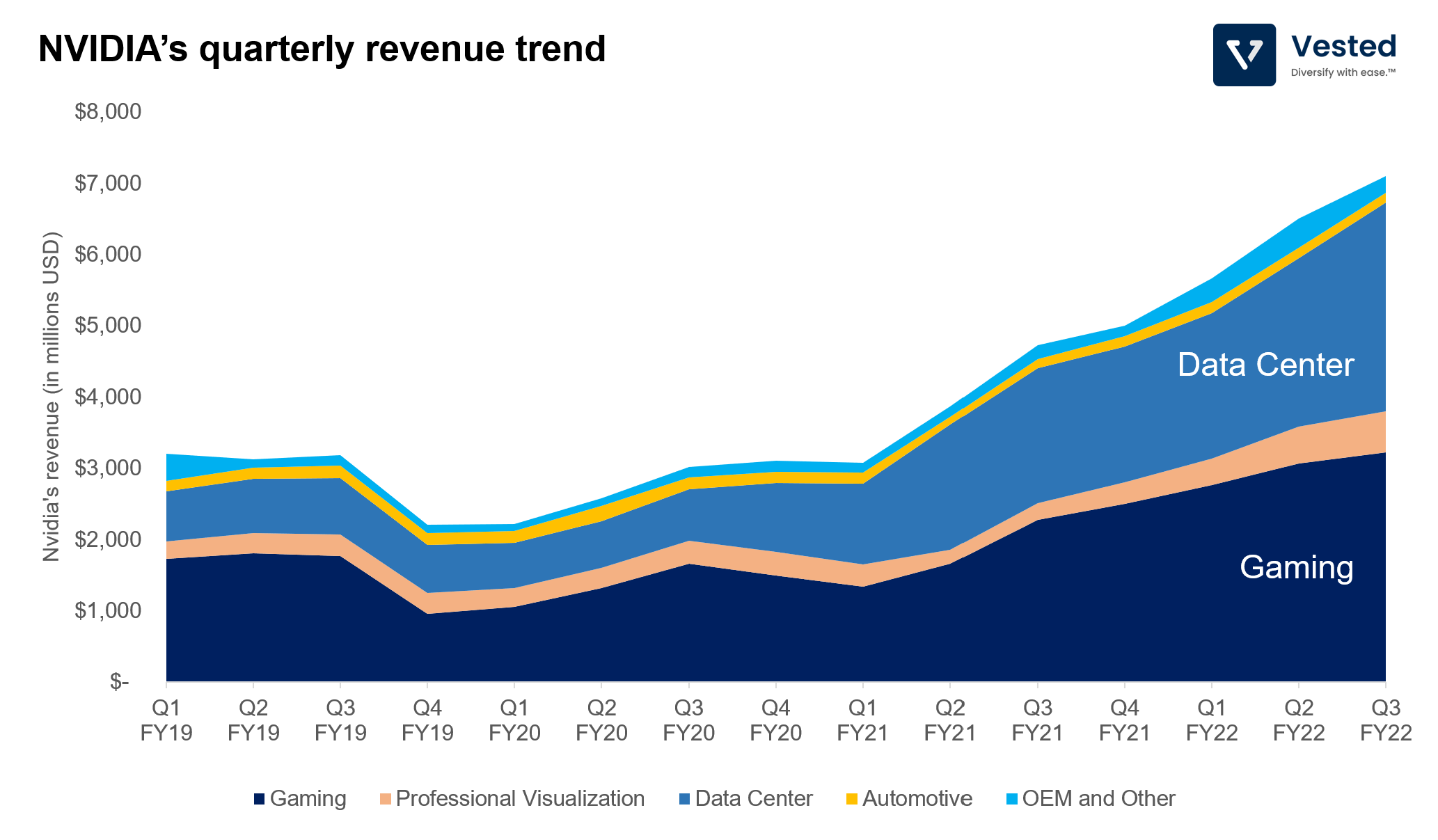

Over the past decade, NVIDIA seized the opportunity and kept doubling down on developing its software and chips for machine learning applications. You can see this in the expansion of its non-gaming revenue.

Over the past few years, NVIDIA has grown revenue from its machine learning products (sold primarily to data centers) to be nearly as big as that from gaming. The company recently announced its quarterly earnings. In the third quarter, it generated $3.2 billion from gaming (up 42% year-over-year) and $2.9 billion from data center sales (up 55% year-over-year). See Figure 1. All this came against the backdrop of supply chain constraints that make NVIDIA’s products really hard to get (it’s almost impossible to buy NVIDIA GPUs, so much so that there’s a live-streaming YouTube channel that checks real-time availability of NVIDIA’s products).
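As a quick back-of-the-envelope check on those numbers, the year-over-year growth rates can be used to back out roughly what each segment earned in the same quarter a year earlier. This is only a sketch: the function and variable names are made up for illustration, the inputs are the rounded figures quoted above, and the "share" computed here covers just these two segments, not NVIDIA's total revenue.

```python
def prior_year_revenue(current, yoy_growth):
    """Back out last year's figure from this year's figure and its YoY growth rate."""
    return current / (1.0 + yoy_growth)


# Rounded Q3 figures quoted in the text, in billions of dollars.
gaming_now, gaming_growth = 3.2, 0.42
dc_now, dc_growth = 2.9, 0.55

gaming_prior = prior_year_revenue(gaming_now, gaming_growth)
dc_prior = prior_year_revenue(dc_now, dc_growth)

# Data center share of the two segments combined (not of total company revenue).
dc_share_now = dc_now / (gaming_now + dc_now)
dc_share_prior = dc_prior / (gaming_prior + dc_prior)

print(f"Gaming a year ago:      ~${gaming_prior:.2f}B")
print(f"Data center a year ago: ~${dc_prior:.2f}B")
print(f"Data center share of the two segments: {dc_share_prior:.1%} -> {dc_share_now:.1%}")
```

Because data center revenue is growing faster than gaming, its share of the pair moves closer to half, which is the trend the paragraph above describes.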

For the next phase of its growth, the company is betting even more on its integrated software/hardware combo. In the first phase of machine learning development, developers supplied large quantities of examples to train a machine learning model (for example, GPT-3, a state-of-the-art language model, was trained on hundreds of billions of words scraped from the internet). Good thing the internet is full of words (and pictures). But what if you want to develop machine learning capabilities for something where training data is hard to come by and hard to label?

That is where simulation comes in.

Simulating the world

If the previous paradigm is using GPUs to train machine learning models by feeding them large amounts of labelled training data, the next paradigm is to simulate the physical world (using GPUs) to generate the training data, and then train new models on it (also using GPUs). We’ve started to see this in self-driving development. Tesla does this to augment its fleet data (see Figure 2). Waymo does this, too.
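To make the idea concrete, here is a toy sketch of "simulate, then train". It is not how Tesla, Waymo, or NVIDIA build their pipelines; the braking-distance physics, the friction value, the noise level, and every function name are assumptions chosen purely for illustration. The point is that the simulator produces the label for free, so no human has to collect or annotate anything.

```python
import random

G = 9.81         # gravitational acceleration in m/s^2
FRICTION = 0.7   # assumed tyre-road friction coefficient (illustrative value)


def simulate_stopping_distance(speed, rng):
    """Toy physics simulator: braking distance in metres plus sensor-like noise."""
    ideal = speed ** 2 / (2 * FRICTION * G)
    return ideal + rng.gauss(0.0, 0.5)


def generate_dataset(n, seed=0):
    """Generate n labelled (speed, stopping distance) examples from the simulator."""
    rng = random.Random(seed)
    data = []
    for _ in range(n):
        speed = rng.uniform(5.0, 30.0)  # speed in m/s
        data.append((speed, simulate_stopping_distance(speed, rng)))
    return data


def fit_slope(dataset):
    """Least-squares fit of distance = slope * speed^2 (a line through the origin)."""
    num = sum((s ** 2) * d for s, d in dataset)
    den = sum((s ** 2) ** 2 for s, _ in dataset)
    return num / den


dataset = generate_dataset(1000)
slope = fit_slope(dataset)
true_slope = 1.0 / (2 * FRICTION * G)
print(f"learned slope: {slope:.4f}, slope built into the simulator: {true_slope:.4f}")
```

A model trained only on simulated examples recovers the relationship built into the simulator. Real systems swap this one-line formula for photorealistic, physics-accurate worlds, which is where the GPU demand comes from.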

NVIDIA offers self-driving simulation software as well. And in a recent presentation, NVIDIA laid out its product roadmap to expand these simulation capabilities to model everything. The company is creating specialized software tools and libraries that let developers build their simulations more easily on NVIDIA’s GPUs.

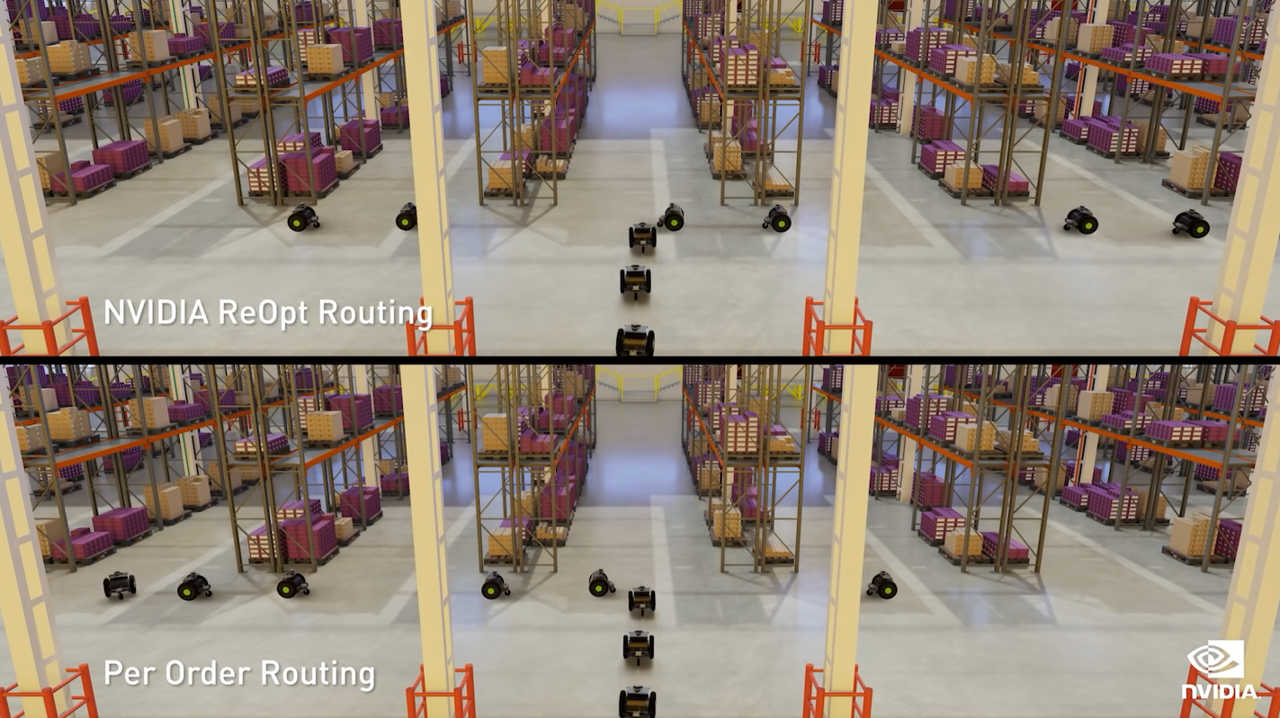

For example, do you need to find the optimal route to reduce cost and pick-up time in your robot-filled ecommerce warehouse? There’s a solution for that.

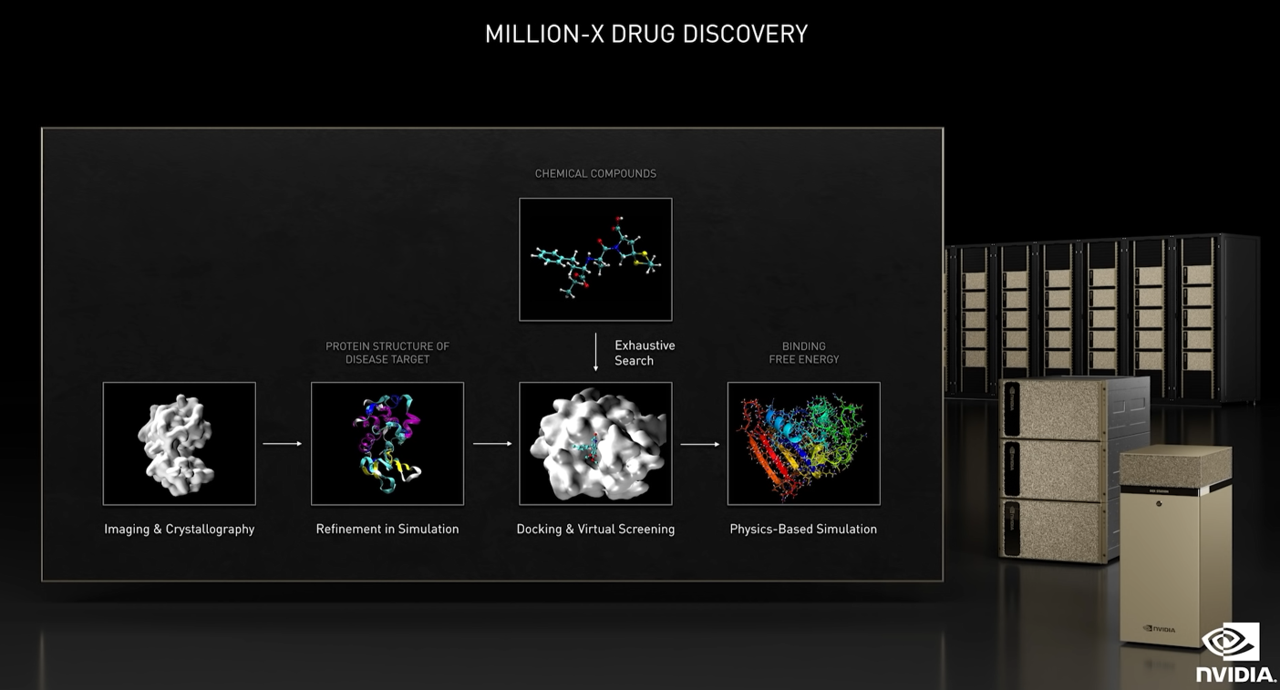

Need to model protein folding to accelerate drug discovery? There’s a solution for that too.

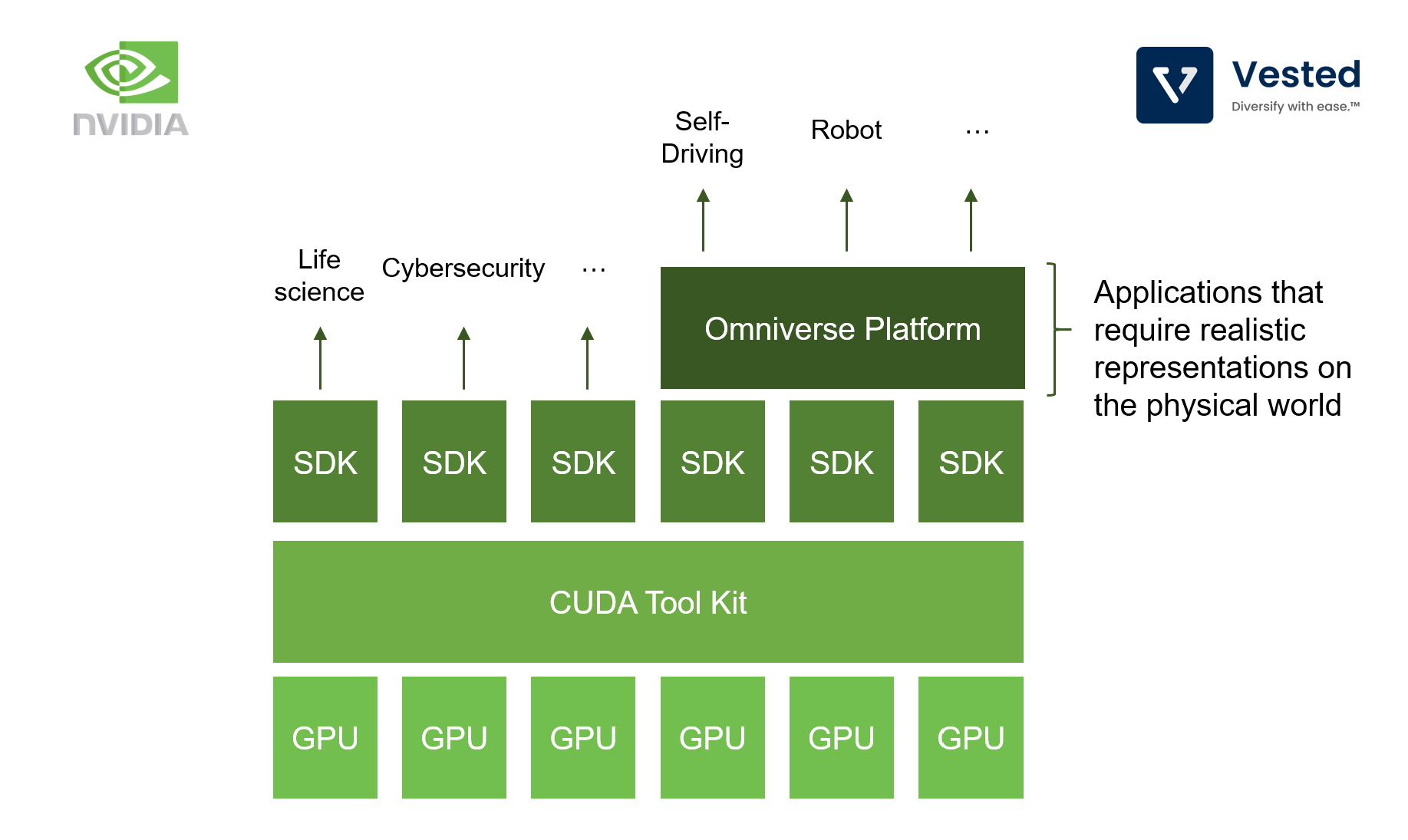

To expand these capabilities to model everything, NVIDIA’s product stack is evolving as follows (from the bottom to the top):

- NVIDIA designs GPUs and ancillary products that help accelerate large data processing

- It refines its CUDA platform to make its chips programmable

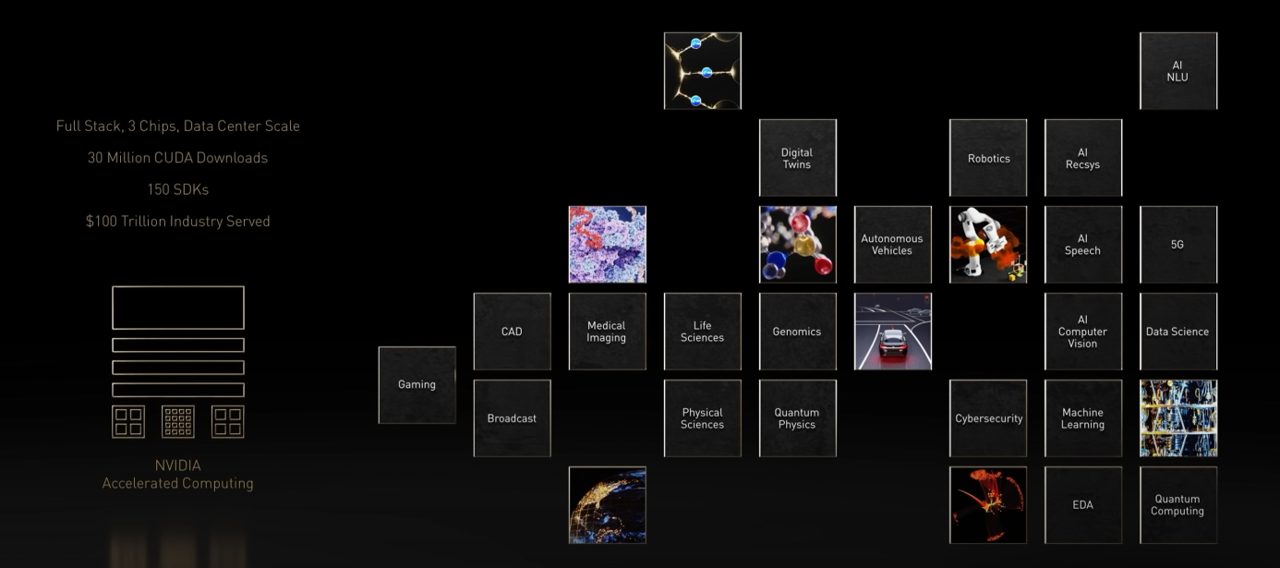

- It releases libraries (more than 150 SDKs) and software tools for different vertical applications (life sciences, quantum computing, cyber security, etc.)

- And for applications that need to simulate real-world physics with photorealism (to train self-driving cars, to create digital twins for factories), NVIDIA created the Omniverse software platform

Just as researchers stumbled onto using NVIDIA’s GPUs for early machine learning applications at the turn of the last decade (thanks to NVIDIA’s superior software), the company is attempting to recreate that luck. The various software tools it recently announced let researchers and customers develop applications that use NVIDIA’s chipsets. The grand vision is to target a $100 trillion industry (Figure 6) and sell even more chips to data centers.

This grand vision sure sounds exciting, and the market seems to agree. Of all chip producers competing in the data center space, NVIDIA trades at the highest multiple (66x next-twelve-month P/E), well above AMD (50x) and far above Intel (13.7x). Both NVIDIA and AMD make GPUs, but the gap between the two in hardware and software for machine learning and other data center applications remains large. NVIDIA is still the market leader.
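To put those multiples side by side, the short sketch below converts each forward P/E into an earnings yield (the inverse of the multiple) and computes NVIDIA's premium over its two peers. The multiples are the ones quoted above; the helper names are illustrative, and this is simple arithmetic rather than a valuation model.

```python
# Next-twelve-month P/E multiples quoted in the text.
FORWARD_PE = {"NVIDIA": 66.0, "Intel": 13.7, "AMD": 50.0}


def earnings_yield(pe):
    """Expected earnings per dollar of share price: the inverse of the P/E multiple."""
    return 1.0 / pe


def premium(pe_a, pe_b):
    """How many times richer multiple A is than multiple B."""
    return pe_a / pe_b


yields = {name: earnings_yield(pe) for name, pe in FORWARD_PE.items()}
nvda_vs_intel = premium(FORWARD_PE["NVIDIA"], FORWARD_PE["Intel"])
nvda_vs_amd = premium(FORWARD_PE["NVIDIA"], FORWARD_PE["AMD"])

for name, pe in FORWARD_PE.items():
    print(f"{name}: {pe:.1f}x forward P/E, {yields[name]:.1%} earnings yield")
print(f"NVIDIA multiple vs Intel: {nvda_vs_intel:.1f}x")
print(f"NVIDIA multiple vs AMD:   {nvda_vs_amd:.2f}x")
```

Read this way, investors are paying almost five times as much per dollar of expected earnings for NVIDIA as for Intel, and about a third more than for AMD.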